Recent breakthroughs suggest that AI systems could match or even surpass human clinicians on certain tasks, auguring a future with improved diagnostic accuracy and access to care. Google has led a wave of research demonstrating what’s possible. Its original Med-PaLM model was the first AI system to exceed the passing score on US Medical Licensing Exam (USMLE)-style questions, and its successor, Med-PaLM 2 – a large language model tuned for medical knowledge – reached about 86.5% accuracy on the MedQA benchmark, a level approaching expert physicians.[1] Its answers to consumer medical questions were strong enough that, in one study, physicians preferred them over answers written by other physicians on 8 out of 9 quality measures.[2] This was a pivotal moment: an LLM not only recalling medical facts, but demonstrating reasoning and even a passable "bedside manner" (albeit in written form).

Google has also released Med-Gemini, a next-generation family of models built on its multimodal Gemini models. In 2024, Med-Gemini set a new record by scoring 91.1% on the MedQA (USMLE-style) benchmark, outperforming its Med-PaLM predecessors and beating GPT-4 on every medical benchmark where a direct comparison was possible.[3] On a set of challenging multimodal tasks (such as diagnosing visual medical cases), Med-Gemini outperformed GPT-4 with vision (GPT-4V) by an average relative margin of 44.5% – a staggering leap forward.[4] Google’s researchers have also demonstrated AI systems that can interpret complex medical images (even 3D CT scans) and generate full radiology reports, with quality that in some settings exceeds the prior state of the art.[5]

Not without precedent

To appreciate how we arrived at this juncture, it’s worth remembering that AI in medicine isn’t new – especially in fields like radiology. In fact, radiology has been on the forefront of medical automation for decades, long before today’s neural networks made headlines. As early as the 1990s, researchers were developing software to detect tumors in medical images. The US FDA approved some of the first computer-aided detection tools for mammography in the late 1990s and early 2000s, which would automatically highlight suspicious areas on X-rays for radiologists to review. At the time, these were revolutionary, if limited, aids: essentially an extra pair of eyes for the doctor. We didn’t call them "AI"; they were often described as pattern-recognition programs or expert systems. But they were the precursors of today’s AI diagnostic tools.

Radiology as a profession has long balanced enthusiasm and skepticism about AI. In 2016, deep learning pioneer Geoffrey Hinton famously quipped, "We should stop training radiologists now."[6] The implication was that algorithms would soon outperform humans at reading medical images. Fast forward to 2025 – radiologists are still being trained and employed, and they have not been rendered obsolete. The journey of applying ML to radiology has been one of incremental progress punctuated by hype cycles. Early computer vision algorithms could detect simple patterns like microcalcifications in breast tissue or nodules in lungs, but they often fell short on accuracy or generalizability. Radiologists learned to treat these tools as a second reader: sometimes helpful, often in need of improvement. What has changed in recent years is the rise of deep learning, driven by advances in both methods and available compute, which has produced large leaps in performance. Modern deep learning systems can pick up subtle image features that would evade older techniques.

What does “AI” in medicine actually mean?

We now have the habit of slapping the label "AI" on everything, or of calling one thing "ML" and another "AI," which is confusing. The math behind the models has changed and their scale has grown enormously, but the core of what "AI" and "ML" do is the same: pattern recognition. Even today’s most advanced medical AI is still pattern recognition at its core, just at a far larger scale. The history of AI in radiology is a cautionary tale: we’ve seen waves of optimism for machine learning in medicine before. Each wave improved on the last, but each also taught us that integrating AI into healthcare takes time, evidence, and often a dose of humility when initial hype meets clinical reality.

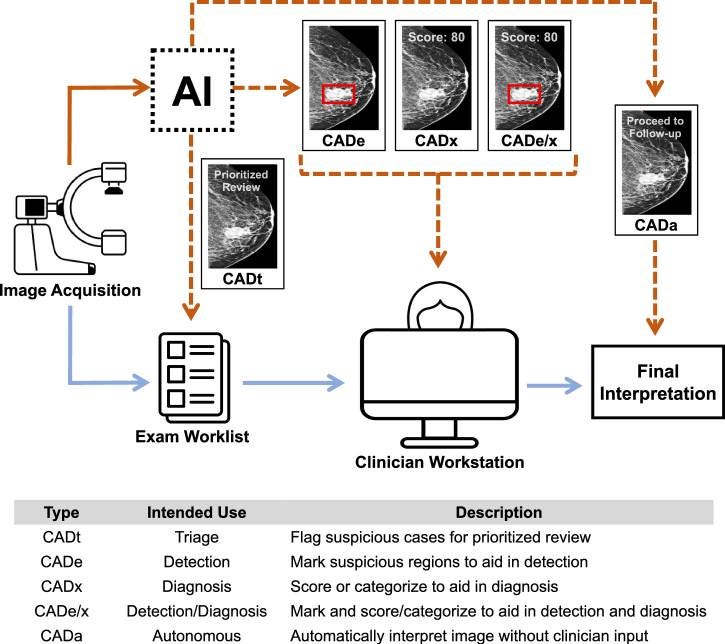

Given the breadth of technologies labeled "AI," it's important to parse what "AI" actually means in a medical context. The public, and sometimes regulators, can struggle to tell very different kinds of algorithmic tools apart. One useful taxonomy divides AI-enabled computer-aided detection and diagnosis (CAD) products into five categories based on their intended use in the clinical workflow:[7]

• Computer-Aided Triage (CADt): These devices flag suspicious cases for prioritized review by clinicians. The core AI output is a binary indicator of whether a case is flagged, and flagged cases are moved up the queue so a clinician can review them sooner. Importantly, CADt devices do not provide annotations to localize findings. They operate before clinician review to help prioritize cases.

• Computer-Aided Detection (CADe): These devices help detect the location of lesions by overlaying markings on images. They assist clinicians during exam interpretation by highlighting areas of concern, but do not provide diagnostic scores or probabilities.

• Computer-Aided Detection and Diagnosis (CADe/x): When a CADe device also assigns a numerical or categorical score to the detected lesion or the whole case, it becomes a CADe/x device. The additional granularity is thought to aid in diagnosis and not just detection. Like CADe, these devices assist clinicians during exam interpretation.

• Computer-Aided Diagnosis (CADx): These devices focus on diagnosis without explicitly marking the locations of lesions across the case. They provide diagnostic suggestions or probabilities that clinicians can use during their interpretation of exams.

• Computer-Aided Autonomous (CADa): This is a variation of CADx where the device is intended to automatically interpret the exam without clinician review. These systems aim to operate with minimal or no human oversight, combining multiple AI components to perform clinical tasks end-to-end.

These five CAD types vary by their outputs and by how clinicians are instructed to use those outputs. CADt devices operate before clinician review to prioritize cases; CADe, CADe/x, and CADx devices are designed to assist clinicians as they interpret exams; and CADa devices are intended to interpret exams without clinician review. The distinction between detection and diagnosis is particularly important, as it affects both a device's capabilities and its regulatory classification.
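To make the taxonomy concrete, here is a minimal Python sketch that maps a device's outputs and its place in the workflow to one of the five categories. The `DeviceProfile` fields and the `classify` function are hypothetical names invented for illustration, and the decision order is a simplification of the descriptions above, not a regulatory classification tool.

```python
from dataclasses import dataclass
from enum import Enum


class CadType(Enum):
    """The five CAD categories, keyed by their usual abbreviations."""
    CADT = "CADt"     # triage: flags cases for prioritized review
    CADE = "CADe"     # detection: marks lesion locations on the image
    CADEX = "CADe/x"  # detection plus a score for the lesion or case
    CADX = "CADx"     # diagnosis: scores or suggestions without localization
    CADA = "CADa"     # autonomous: interprets the exam without clinician review


@dataclass(frozen=True)
class DeviceProfile:
    """Hypothetical summary of what a device outputs and when it runs."""
    runs_before_clinician_review: bool  # used to reorder the reading queue
    localizes_findings: bool            # overlays markings on the image
    scores_findings: bool               # numeric or categorical score
    clinician_reviews_exam: bool        # a clinician still reads the exam


def classify(profile: DeviceProfile) -> CadType:
    """Map a device profile to a CAD category (illustrative, not regulatory)."""
    if not profile.clinician_reviews_exam:
        # No clinician read at all: the autonomous category.
        return CadType.CADA
    if profile.runs_before_clinician_review:
        # Triage acts before the read; its output is a flag, not markings.
        return CadType.CADT
    if profile.localizes_findings and profile.scores_findings:
        return CadType.CADEX
    if profile.localizes_findings:
        return CadType.CADE
    if profile.scores_findings:
        return CadType.CADX
    raise ValueError("profile matches none of the five CAD categories")


if __name__ == "__main__":
    # A hypothetical tool that marks suspicious regions and scores each one.
    marker = DeviceProfile(
        runs_before_clinician_review=False,
        localizes_findings=True,
        scores_findings=True,
        clinician_reviews_exam=True,
    )
    print(classify(marker).value)  # CADe/x
```

Checking for clinician review first reflects the framing in the text: whether a human reads the exam separates the autonomous category from the assistive ones before the form of the output even matters.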

When people talk about an "AI doctor," they might imagine the autonomous category taken to its extreme: a medical assistant that can talk to you, examine you, diagnose, and prescribe. In reality, most deployed medical AI today falls into the assistive categories: narrow tools for triage, detection, diagnosis support, or prediction, usually operating under a clinician's oversight. The confusion arises because if an app tells you your skin lesion "looks suspicious for melanoma," a layperson might assume the AI has effectively made a diagnosis. But a regulator might classify that app differently if it expects a dermatologist to confirm the finding.

This ambiguity isn't just academic – it matters for safety and policy. If the public believes an "AI" is handling their care, they might over-trust or under-trust it depending on their understanding. Likewise, regulators are grappling with how to set rules for these different tools. Is it a medical device or just an informational service? Should it be held to the standard of a doctor, or of a decision aid? As we'll see, answering these questions depends on defining the AI's role clearly – something that's now a priority for regulators worldwide.

Regulatory pathways

The regulatory landscape for these tools is evolving quickly, and it differs significantly between the United States and the European Union. In the US, the FDA oversees medical AI under its existing medical device framework, which covers software as a medical device (SaMD). As of its late-2023 tally, the FDA had authorized more than 690 AI/ML-enabled medical devices for clinical use.[8] This number might surprise people: a lot of AI is already cleared for use in healthcare, from tools that help radiologists detect cancers to algorithms that assist in managing insulin dosing. How does the FDA decide what level of scrutiny to apply? It hinges on intended use and risk.

The more an AI tool is intended to support clinicians (with a human in the loop making the final call), the more flexibly regulators can treat it using existing pathways. The more it is intended to diagnose or act autonomously, the closer it comes to the standard of care a human provider would be held to, and the stricter the regulatory pathway. The FDA has been actively refining its guidance in this space. It has issued discussion papers on how to handle AI that can learn and adapt (continuously learning systems) and on AI used in drug development. (Notably, in January 2025 the FDA released draft guidance on using AI to support regulatory decision-making for drug and biological products, signaling that even drug approvals might one day rely on AI-aided analyses.[9]) For now, software intended to diagnose, treat, or prevent disease is generally regulated as a medical device and goes through one of the device pathways (510(k), De Novo, or PMA, depending on risk).
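The risk-based routing among those three pathways can be sketched as a toy decision function. This is a deliberately simplified model under stated assumptions: the `Risk` enum and `premarket_pathway` function are hypothetical, the low/moderate/high levels stand in only roughly for FDA device Classes I, II, and III, and real determinations also involve 510(k) exemptions, product codes, and FDA feedback that the sketch ignores.

```python
from enum import Enum


class Risk(Enum):
    """Rough stand-ins for FDA device classes (hypothetical simplification)."""
    LOW = 1       # roughly Class I
    MODERATE = 2  # roughly Class II
    HIGH = 3      # roughly Class III


def premarket_pathway(risk: Risk, has_predicate: bool) -> str:
    """Pick one of the three device pathways named in the text.

    Simplified on purpose: ignores 510(k) exemptions and other nuances.
    """
    if risk is Risk.HIGH:
        # Premarket approval: the most stringent review, for high-risk devices.
        return "PMA"
    if has_predicate:
        # Show substantial equivalence to a legally marketed predicate device.
        return "510(k)"
    # Novel low-to-moderate-risk device with no suitable predicate.
    return "De Novo"


if __name__ == "__main__":
    # A hypothetical triage tool similar to devices already on the market.
    print(premarket_pathway(Risk.MODERATE, has_predicate=True))   # 510(k)
    # A hypothetical first-of-its-kind moderate-risk tool.
    print(premarket_pathway(Risk.MODERATE, has_predicate=False))  # De Novo
```

The ordering encodes the point made above: risk is checked first, and only for lower-risk devices does the existence of a comparable, already-cleared product decide between the lighter 510(k) route and De Novo.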

Across the Atlantic, the European Union is taking a more sweeping approach. The EU's Medical Devices Regulation (MDR), which took effect in 2021, substantially raised the bar for medical device approval in Europe (including software). Under the MDR, many software-based medical devices (like diagnostic AI) are classified as higher risk than before, meaning they require a rigorous conformity assessment by notified bodies (third-party reviewers) before they can get CE marking. On top of this, the EU has introduced the Artificial Intelligence Act (AI Act), approved in 2024, which is the world's first comprehensive legal framework for AI. Under the AI Act, most medical AI systems will be deemed "high-risk AI" by definition: AI that is itself a medical device, or a safety component of one, and that requires a notified-body conformity assessment under the MDR is automatically classified as high-risk.[10] This triggers a whole set of additional requirements. For example, the AI Act mandates that developers of high-risk AI implement risk management, documentation, data governance, transparency, and human oversight for their systems.

Concretely, if you are making, say, AI-powered ECG interpretation software in the EU, you now face two overlapping regulatory hurdles: you must comply with the MDR (proving safety and performance as a medical device) and with the AI Act's requirements for high-risk AI. For AI covered by existing product legislation such as the MDR, the AI Act's high-risk obligations apply 36 months after the Act's entry into force (that is, from August 2027), giving companies a transitional period.[11] But they are coming. And they are extensive. A recent analysis in npj Digital Medicine noted that the technical documentation required by the AI Act for a medical AI system is substantially more detailed than what the FDA might require for a 510(k) clearance in the US.[12] Developers will need to provide transparency documentation explaining how the AI works, how it was trained, and how biases were addressed; they will need to implement post-market monitoring for AI-specific issues; and they must ensure a means for human override or intervention is in place.

From a regulatory perspective, the EU is formalizing the principle that higher-risk uses of AI demand stricter oversight. The categories we parsed above (triage, detection, diagnosis, autonomous) will likely all count as "high-risk" in Europe if they are part of a medical product, though the specific compliance steps may be tuned to the exact use. The AI Act also introduces the concept of general-purpose AI (GPAI) models, such as a large language model that could be repurposed for healthcare, and sets out separate obligations for them, meaning that even using something like GPT-4 in a medical setting might invoke regulatory scrutiny unless carefully sandboxed.

It's a lot for companies to navigate. The MDR has already been challenging – many smaller medtech companies complained of higher costs, more paperwork, and delays in getting products approved under the new regime. Now the AI Act layers on top of that. There's concern that this could stifle innovation or at least slow down the availability of AI tools in Europe.[13] Regulators are aware of this tension; the AI Act discussions have included flexibility mechanisms and grace periods. And not all jurisdictions are following the EU's heavy regulatory approach – notably, the UK is opting for a more "light-touch, pro-innovation" framework, diverging from the EU model. But the bottom line is: regulation is now playing catch-up to technology, trying to ensure patient safety without smothering the promising advances. In the US, the FDA continues to clear AI devices at a steady clip while issuing guidance and updates to adapt criteria. In the EU, a more prescriptive regime is being built, which will require companies to be much more rigorous in proving their AI is trustworthy before it ever reaches a patient.

The turbulent present

In the US, we now find ourselves in the second Trump era, featuring a Republican Congress and a Republican President, a new department devoted to eliminating 'government waste' (or political enemies), and new agency heads that are antagonistic to the status quo. It's a period of uncertainty. In my conversations with healthcare founders and FDA regulatory consultants, I've heard two markedly different takes on what the next couple of years will bring.

One camp is optimistic. They argue that right now is the golden window to push AI innovations into healthcare practice. Their reasoning is that the recent changes are removing unnecessary friction from the regulatory process. This camp points out that the regulatory rules today are actually workable and the hostility to further regulation is likely to keep them so. "Get your AI device approved in the next year or two" was the strong advice of one consultant.

The other camp sees incompetence and chaos. They argue that the changes brought about by the new administration may make it much harder to get AI devices approved — not because of new laws, but because of a creeping institutional malaise. Their concern isn’t about what the rules say, but about who’s left to enforce them and whether the agencies can function at all. The vacuum of technical leadership, these skeptics say, leaves reviews vulnerable to inconsistency and arbitrariness. Even well-prepared submissions might flounder in a system without the capacity to review them in a timely or coherent way.

It’s easy to be swept up by the future — shimmering product demos, perfect benchmarks, the dream of healthcare made intelligent and instant. But we don’t build futures in a vacuum. We build them in institutions, with fallible people, inside legal regimes and political moods. The next few years will be a stress test not just for AI in medicine, but for the bureaucracies we’ve built to manage complexity, risk, and public trust.

If history is any guide, technology will outpace the rules — and then the rules will catch up, often imperfectly. Whether this moment becomes a breakthrough or a backlash depends not just on the models, but on the mechanisms we use to govern them. And on whether those mechanisms are still standing when we need them.

https://qz.com/2016153/ai-promised-to-revolutionize-radiology-but-so-far-its-failing

https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-aiml-enabled-medical-devices

https://www.fda.gov/regulatory-information/search-fda-guidance-documents/considerations-use-artificial-intelligence-support-regulatory-decision-making-drug-and-biological